More and more products and services are taking advantage of the modeling and prediction capabilities of AI. This post presents the nvidia-docker tool for integrating AI (Artificial Intelligence) software bricks into a microservice architecture. The main advantage explored in this article is the use of the host system's GPU (Graphics Processing Unit) resources to accelerate multiple containerized AI applications.

To understand the usefulness of nvidia-docker, we will start by describing what kind of AI can benefit from GPU acceleration. Second, we will present how to set up the nvidia-docker tool. Finally, we will describe which tools are available to use GPU acceleration in your applications and how to use them.

Why use GPUs in AI applications?

In the field of artificial intelligence, two main subfields are used: machine learning and deep learning. The latter is part of a larger family of machine learning methods based on artificial neural networks.

In the context of deep learning, where operations are essentially matrix multiplications, GPUs are more efficient than CPUs (Central Processing Units). This is why the use of GPUs has grown in recent years. Indeed, GPUs are considered the heart of deep learning because of their massively parallel architecture.
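To see why this workload suits a GPU, consider the minimal pure-Python sketch below (illustrative only, not how any framework implements it). Multiplying an n×k matrix by a k×m matrix produces n·m output cells, and each cell is a dot product that depends only on one row of the first matrix and one column of the second. A GPU can hand each cell to a separate thread, whereas this code computes them one after another.

```python
# Naive matrix multiplication, written to make the parallel structure visible.

def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    # Each output cell (i, j) reads only row i of A and column j of B and never
    # another output cell, so all n * m cells could be computed at the same time.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]

if __name__ == "__main__":
    print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```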

However, GPUs cannot execute just any program. Indeed, they rely on a specific language (CUDA for NVIDIA) to take advantage of their architecture. So, how do you use and communicate with GPUs from your applications?

The NVIDIA CUDA technology

NVIDIA CUDA (Compute Unified Device Architecture) is a parallel computing architecture combined with an API for programming GPUs. CUDA translates application code into an instruction set that GPUs can execute.
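As an illustration of the CUDA programming model, the sketch below emulates in sequential Python how a kernel launch spreads work over threads. In real CUDA C, a kernel is written from the point of view of a single thread, which computes its global index from the built-in variables blockIdx, blockDim and threadIdx; the GPU then runs all threads of the grid in parallel. The function names here (vector_add_kernel, launch, vector_add) are hypothetical, and the nested loops only stand in for the hardware scheduler.

```python
# Sequential emulation of a CUDA kernel launch. The CUDA C equivalent is:
#     int i = blockIdx.x * blockDim.x + threadIdx.x;
#     if (i < n) c[i] = a[i] + b[i];

def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: list[float], b: list[float], c: list[float]) -> None:
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(c):  # guard: the last block may contain spare threads
        c[i] = a[i] + b[i]

def launch(grid_dim: int, block_dim: int, a, b, c) -> None:
    # On a GPU, every (block, thread) pair below would run concurrently.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, c)

def vector_add(a: list[float], b: list[float], block_dim: int = 4) -> list[float]:
    c = [0.0] * len(a)
    grid_dim = (len(a) + block_dim - 1) // block_dim  # ceil(n / block_dim)
    launch(grid_dim, block_dim, a, b, c)
    return c

if __name__ == "__main__":
    print(vector_add([1.0, 2.0, 3.0, 4.0, 5.0], [10.0, 20.0, 30.0, 40.0, 50.0]))
    # [11.0, 22.0, 33.0, 44.0, 55.0]
```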

A CUDA SDK and libraries such as cuBLAS (Basic Linear Algebra Subroutines) and cuDNN (Deep Neural Network library) have been developed to communicate easily and efficiently with a GPU. CUDA is available in C, C++ and Fortran, and there are wrappers for other languages such as Java, Python and R. For example, deep learning libraries like TensorFlow and Keras are built on these technologies.
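Once such a framework is installed, it can report which GPUs it sees through CUDA. The sketch below assumes TensorFlow 2.x, whose tf.config.list_physical_devices("GPU") call lists the visible GPU devices; the helper name tensorflow_gpus is hypothetical and returns None when TensorFlow is not installed. Run inside a container, an empty list is a quick sign that no GPU was exposed to it.

```python
from typing import Optional

def tensorflow_gpus() -> Optional[list[str]]:
    """List the GPU devices TensorFlow can see, or None if it is not installed."""
    try:
        import tensorflow as tf  # assumption: TensorFlow 2.x
    except ImportError:
        return None
    return [device.name for device in tf.config.list_physical_devices("GPU")]

if __name__ == "__main__":
    gpus = tensorflow_gpus()
    if gpus is None:
        print("TensorFlow is not installed")
    elif not gpus:
        print("TensorFlow sees no GPU and will run on the CPU")
    else:
        print("GPUs visible to TensorFlow:", gpus)
```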

Why use nvidia-docker?

Nvidia-docker addresses the needs of developers who want to add AI functionality to their applications, containerize them and deploy them on servers powered by NVIDIA GPUs.

The objective is to set up an architecture that allows deep learning models to be developed and deployed as services accessible through an API. In this way, the utilization rate of GPU resources is optimized by making them available to multiple application instances.
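A minimal sketch of such a service, using only the Python standard library: a single POST /predict endpoint that accepts JSON features and returns a prediction. The predict function, its weights, the route and the default port 8080 are all hypothetical placeholders. A real service would load a TensorFlow/Keras model once at startup, so that every request reuses the same GPU-resident model, and would typically run behind a production-grade server.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

def predict(features: list[float]) -> float:
    # Hypothetical stand-in for a real model loaded once at startup.
    weights = [0.5] * len(features)
    return sum(w * x for w, x in zip(weights, features))

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            result = predict(payload["features"])
        except (ValueError, KeyError, TypeError):
            self.send_error(400, 'expected a JSON body like {"features": [...]}')
            return
        body = json.dumps({"prediction": result}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # silence per-request logging
        pass

def serve(port: int = 8080, host: str = "0.0.0.0") -> HTTPServer:
    # 0.0.0.0 makes the service reachable from outside its container.
    server = HTTPServer((host, port), PredictHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server

def request_prediction(url: str, features: list[float]) -> float:
    """Client side: POST the features and return the prediction."""
    data = json.dumps({"features": features}).encode()
    req = urllib.request.Request(url, data=data,
                                 headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())["prediction"]
```

Several instances of such a container, each holding one model or one model version, can then share the GPUs of the same host.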

In addition, we benefit from the advantages of containerized environments:

- Isolation of the instances of each AI model.

- Colocation of several models with their specific dependencies.

- Colocation of the same model under several versions.

- Continuous deployment of models.

- Model performance monitoring.

Natively, using a GPU in a container requires installing CUDA in the container and granting it privileges to access the device. With this in mind, the nvidia-docker tool was developed to expose NVIDIA GPU devices in containers in an isolated and secure way.
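For comparison, the manual approach that nvidia-docker replaces looks roughly like the sketch below: every NVIDIA device node on the host is passed explicitly with Docker's --device flag. The sketch assumes a Linux host where the driver creates character devices such as /dev/nvidia0 (one per GPU), /dev/nvidiactl and /dev/nvidia-uvm; the helper names and the image my-cuda-app:latest are hypothetical. Exposing the devices is not enough on its own: the image must also ship user-space driver libraries that match the host driver version, which is exactly the coupling nvidia-docker removes.

```python
import glob
import os
import stat

def nvidia_device_nodes(pattern: str = "/dev/nvidia*") -> list[str]:
    """Return the NVIDIA character devices present on this host (may be empty)."""
    nodes = []
    for path in sorted(glob.glob(pattern)):
        # Skip directories such as /dev/nvidia-caps; keep only character devices.
        if stat.S_ISCHR(os.stat(path).st_mode):
            nodes.append(path)
    return nodes

def device_flags(nodes: list[str]) -> list[str]:
    """Turn device paths into repeated `--device PATH` arguments for docker run."""
    flags: list[str] = []
    for node in nodes:
        flags += ["--device", node]
    return flags

if __name__ == "__main__":
    # Hypothetical image that bundles driver libraries matching the host driver.
    cmd = ["docker", "run", "--rm", *device_flags(nvidia_device_nodes()),
           "my-cuda-app:latest"]
    print(" ".join(cmd))
```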

At the time of writing, the latest version of nvidia-docker is v2. This version differs greatly from v1 in the following ways:

- Version 1: Nvidia-docker is implemented as an overlay on top of Docker. That is, to create the container you had to use nvidia-docker (e.g. nvidia-docker run ...), which performs the actions (among others, the creation of volumes) that make the GPU devices visible in the container.

- Version 2: Deployment is simplified by replacing Docker volumes with Docker runtimes. Indeed, to launch a container, you now use the NVIDIA runtime through Docker itself (e.g. docker run --runtime nvidia ...).
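The difference between the two versions is easiest to see in the command line itself. The helper below is a hypothetical sketch that builds each form as an argument list for subprocess. It also includes the --gpus all flag that Docker 19.03 and later provide natively, which still relies on the NVIDIA Container Toolkit being installed. NVIDIA_VISIBLE_DEVICES tells the NVIDIA runtime which GPUs to expose; the official CUDA images already set it, so passing it explicitly mainly matters for other images. The image tag in the usage line is an assumption; check Docker Hub for current tags.

```python
import subprocess

def gpu_run_command(image: str, args: list[str], style: str = "v2") -> list[str]:
    """Build a `docker run` command exposing all GPUs, in one of three styles."""
    if style == "v1":
        # nvidia-docker 1.0: a wrapper binary used in place of `docker`
        return ["nvidia-docker", "run", "--rm", image, *args]
    if style == "v2":
        # nvidia-docker 2.0: the plain Docker CLI, selecting the NVIDIA runtime
        return ["docker", "run", "--rm", "--runtime", "nvidia",
                "-e", "NVIDIA_VISIBLE_DEVICES=all", image, *args]
    if style == "gpus":
        # Docker 19.03+: built-in flag, backed by the NVIDIA Container Toolkit
        return ["docker", "run", "--rm", "--gpus", "all", image, *args]
    raise ValueError(f"unknown style: {style!r}")

if __name__ == "__main__":
    # Assumed image tag; prints the GPU table from inside the container
    # if the runtime is wired up correctly.
    cmd = gpu_run_command("nvidia/cuda:11.0.3-base-ubuntu20.04", ["nvidia-smi"])
    subprocess.run(cmd, check=True)
```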

Note that because of their different architectures, the two versions are not compatible: an application written for v1 must be rewritten for v2.

Setting up nvidia-docker

The components required to use nvidia-docker are:

- A container runtime.

- An available GPU.

- The NVIDIA Container Toolkit (the main component of nvidia-docker).
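A quick preflight check can confirm that each of these components is present. The sketch below only looks for one executable per component on the PATH, which is a heuristic rather than a full validation: nvidia-smi is installed with the NVIDIA driver, and nvidia-container-cli comes from libnvidia-container, on which the NVIDIA Container Toolkit is built. The mapping of components to executables is an assumption to adjust for your distribution.

```python
import shutil

# Assumed mapping: one executable whose presence suggests each component.
REQUIRED = {
    "container runtime (Docker)": "docker",
    "NVIDIA driver / GPU": "nvidia-smi",
    "NVIDIA Container Toolkit": "nvidia-container-cli",
}

def preflight(required: dict[str, str] = REQUIRED) -> dict[str, bool]:
    """Map each component to True if its executable is found on the PATH."""
    return {component: shutil.which(exe) is not None
            for component, exe in required.items()}

if __name__ == "__main__":
    for component, ok in preflight().items():
        print(f"[{'ok' if ok else 'missing'}] {component}")
```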

Prerequisites

Docker

A container runtime is required to run the NVIDIA Container Toolkit. Docker is the recommended runtime, but Podman and containerd are also supported.

The official Docker documentation describes the installation process.

NVIDIA driver

Drivers are required to use a GPU device. For NVIDIA GPUs, the drivers for a given OS can be obtained from the NVIDIA driver download page by filling in the details of the GPU model.

The drivers are installed by running the downloaded executable. On Linux, use the following commands, replacing the file name with the one you downloaded:

chmod +x NVIDIA-Linux-x86_64-470.94.run

sudo ./NVIDIA-Linux-x86_64-470.94.run

Reboot the host machine at the end of the installation so that the newly installed drivers are loaded.
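After rebooting, the driver can be checked with nvidia-smi, which ships with it. The sketch below calls nvidia-smi with its --query-gpu and --format=csv,noheader options and parses one line per GPU into a name and a driver version; parse_gpu_query and query_gpus are hypothetical helper names. Comparing driver_version with the version you downloaded (470.94 in the example above) confirms that the new driver is the one in use.

```python
import subprocess

def parse_gpu_query(csv_text: str) -> list[dict[str, str]]:
    """Parse `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader`."""
    gpus = []
    for line in csv_text.strip().splitlines():
        # Split on the last comma so any comma inside the GPU name is kept.
        name, driver_version = line.rsplit(",", 1)
        gpus.append({"name": name.strip(), "driver_version": driver_version.strip()})
    return gpus

def query_gpus() -> list[dict[str, str]]:
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_query(result.stdout)

if __name__ == "__main__":
    for gpu in query_gpus():
        print(gpu["name"], gpu["driver_version"])
```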

Installing nvidia-docker

Nvidia-docker is available on its GitHub project page. To install it, follow the installation guide that matches your server and architecture.
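Once it is installed, you can check that Docker knows about the NVIDIA runtime used by v2. docker info accepts a Go template, and {{json .Runtimes}} prints the registered runtimes as a JSON object. With the nvidia-docker2 package, an "nvidia" entry is typically registered in /etc/docker/daemon.json. The function names below are hypothetical, and the check assumes the Docker CLI can reach the daemon without sudo.

```python
import json
import subprocess

def parse_runtimes(json_text: str) -> dict:
    """Parse the output of `docker info --format '{{json .Runtimes}}'`."""
    return json.loads(json_text)

def docker_runtimes() -> dict:
    result = subprocess.run(
        ["docker", "info", "--format", "{{json .Runtimes}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_runtimes(result.stdout)

def has_nvidia_runtime(runtimes: dict) -> bool:
    # "nvidia" is the name passed to `docker run --runtime nvidia`.
    return "nvidia" in runtimes

if __name__ == "__main__":
    print("nvidia runtime registered:", has_nvidia_runtime(docker_runtimes()))
```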

We now have an infrastructure that provides isolated environments with access to GPU resources. To use GPU acceleration in applications, NVIDIA has developed several tools (non-exhaustive list):

- CUDA Toolkit: a set of tools for developing software that performs computations using the CPU, RAM and GPU together. It can be used on x86, Arm and POWER platforms.

- NVIDIA cuDNN: a library of primitives that accelerates deep learning networks and optimizes GPU performance for major frameworks such as TensorFlow and Keras.

- NVIDIA cuBLAS: a library of GPU-accelerated linear algebra subroutines.
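Whether an application can actually reach these libraries depends on the image it runs in. The sketch below uses Python's ctypes.util.find_library to look up the CUDA runtime (cudart), cuBLAS and cuDNN by their base names. If the runtime is found, it calls cudaRuntimeGetVersion, which encodes the version as 1000 × major + 10 × minor (11040 for CUDA 11.4). The helper names are hypothetical, and library lookup rules differ between systems, so treat None as "not found on the default search path" rather than proof of absence.

```python
import ctypes
import ctypes.util
from typing import Optional

# Base names as passed to find_library; on Linux these resolve to files
# such as libcudart.so.*, libcublas.so.* and libcudnn.so.*.
CUDA_LIBRARIES = ["cudart", "cublas", "cudnn"]

def locate_cuda_libraries(names: list[str] = CUDA_LIBRARIES) -> dict[str, Optional[str]]:
    """Map each library name to the file found by the loader, or None."""
    return {name: ctypes.util.find_library(name) for name in names}

def cuda_runtime_version() -> Optional[int]:
    """Return the CUDA runtime version (e.g. 11040), or None if unavailable."""
    path = ctypes.util.find_library("cudart")
    if path is None:
        return None
    libcudart = ctypes.CDLL(path)
    version = ctypes.c_int()
    # cudaRuntimeGetVersion returns 0 (cudaSuccess) and fills `version`.
    if libcudart.cudaRuntimeGetVersion(ctypes.byref(version)) != 0:
        return None
    return version.value

if __name__ == "__main__":
    print(locate_cuda_libraries())
    print("CUDA runtime version:", cuda_runtime_version())
```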

By using these tools in application code, AI and linear algebra tasks are accelerated. With the GPUs now visible in the containers, the application can send data and operations to the GPU for processing.

The CUDA Toolkit is the lowest-level option. It offers the most control (over memory and instructions) for building custom applications. Libraries, on the other hand, provide an abstraction over CUDA functionality, letting you focus on application development rather than on the CUDA implementation.

Once all these elements are in place, the architecture based on nvidia-docker is ready to use.

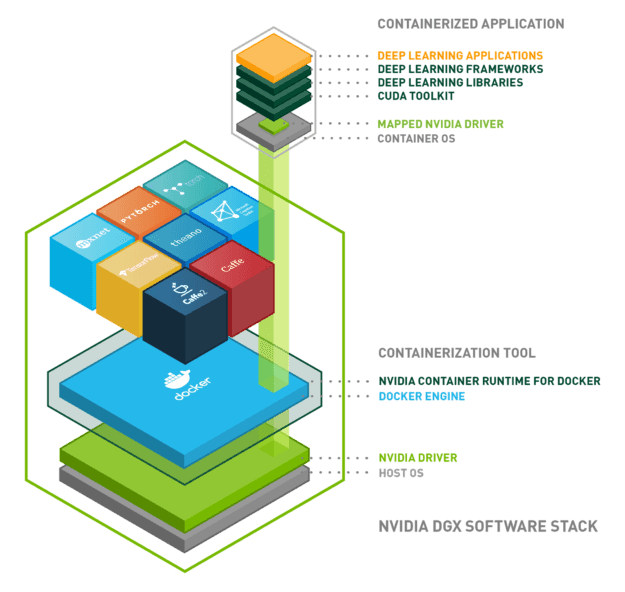

Below is a diagram to summarize every little thing we have seen:

Summary

We have set up an architecture that lets our applications use GPU resources from isolated environments. To summarize, the architecture is composed of the following bricks:

- Operating system: Linux, Windows …

- Docker: isolation of the environment using Linux containers

- NVIDIA driver: installation of the driver for the hardware in question

- NVIDIA container runtime: orchestration of the previous three

- Applications in Docker containers:

  - CUDA

  - cuDNN

  - cuBLAS

  - TensorFlow/Keras

NVIDIA continues to develop tools and libraries around AI technologies, with the goal of establishing itself as a leader. Depending on the use case, other technologies may complement nvidia-docker or be better suited than it.
